We’re building something that hasn’t really existed before: a way for autonomous agents to be accountable for what they do, how they decide, and the impact they leave behind. And we’re building it in the open, where every design choice, experiment, and correction is visible. That’s deliberate. Accountability only works when the system itself is observable.

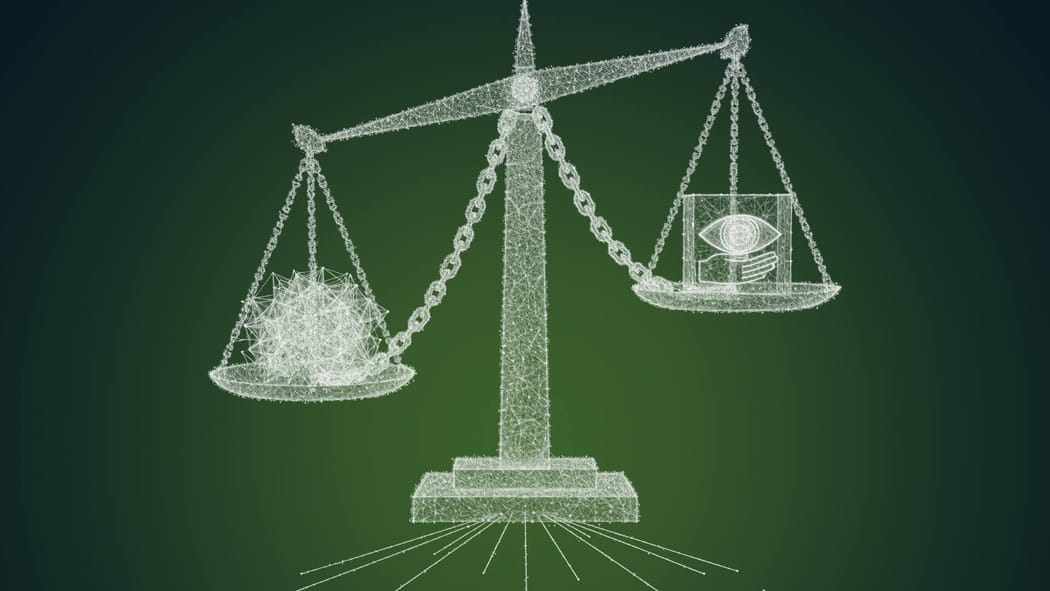

Here’s the simple idea underneath all of this: if AI is going to take actions on behalf of people, organisations, and whole communities, then those actions need to be traceable, verifiable, and governed through shared rules that no single actor can quietly override. Not controlled in a heavy-handed way, but held in relationship, with checks, balances, and the kind of feedback loops that make cooperation safe.

This framing sits at the core of what we’re building across the entire portfolio of IXO-powered platforms, using our Qi cooperating system.

We’re not building “AI assistants”.

We’re building accountable agents that, like employees or contractors, can show their work.

The shift from intention to verifiable action

Most systems today capture task-driven intent such as "Research a topic", "Craft a message", or "Generate an image". But they rarely express intent as an outcome to be achieved, verify whether that intent was carried through faithfully, or evaluate why an agent took the path it did to deliver its results.

Agentic systems raise the stakes: agents act continuously, across multiple contexts, with delegated authority. Without visibility into their reasoning, evidence, and state transitions, you’d never know whether they followed the rules, improvised safely, or drifted off course.

So we’re designing explicit checkpoints inside every flow:

- why a specific goal was pursued

- who was authorised to act

- what capability was delegated

- what evidence the agent used

- how it justified the action

- what outcome it produced

- whether the outcome met agreed criteria for success or failure

And all of this becomes part of a persistent record: cryptographically signed, linked to state changes, and anchored to a publicly verifiable record on-chain.
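To make the checkpoint list concrete, here is a minimal sketch of what one such record could look like. Everything in it is an illustrative assumption rather than the actual IXO or Qi schema: the `Checkpoint` class and its field names are hypothetical, an HMAC stands in for a real public-key signature, and a `prev_digest` hash chain stands in for linking records to state changes. On-chain anchoring is not shown.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class Checkpoint:
    """Hypothetical record with one field per checkpoint question."""
    goal: str                  # why a specific goal was pursued
    actor: str                 # who was authorised to act
    capability: str            # what capability was delegated
    evidence: tuple[str, ...]  # what evidence the agent used
    justification: str         # how it justified the action
    outcome: str               # what outcome it produced
    met_criteria: bool         # whether the outcome met agreed criteria
    prev_digest: str           # digest of the preceding record (hash chain)


def digest(cp: Checkpoint) -> str:
    """Deterministic SHA-256 digest of the record's sorted-key JSON form."""
    payload = json.dumps(asdict(cp), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def sign(cp: Checkpoint, key: bytes) -> str:
    """HMAC stand-in for a signature (assumption: a real system uses key pairs)."""
    return hmac.new(key, digest(cp).encode("utf-8"), hashlib.sha256).hexdigest()


def verify(cp: Checkpoint, signature: str, key: bytes) -> bool:
    """Recompute the signature and compare in constant time."""
    return hmac.compare_digest(sign(cp, key), signature)


key = b"demo-key"  # placeholder secret, for this example only
first = Checkpoint(
    goal="summarise report",
    actor="agent-1",
    capability="report/read",
    evidence=("doc-17",),
    justification="requested by owner",
    outcome="summary-v1",
    met_criteria=True,
    prev_digest="",
)
signature = sign(first, key)

# A later record points back at the first one through its digest.
second = replace(first, outcome="summary-v2", prev_digest=digest(first))

# Any change to a signed record invalidates its signature.
tampered = replace(first, outcome="something else")
print(verify(first, signature, key), verify(tampered, signature, key))
```

The point of the sketch is the shape: each question in the list becomes a field, and the signature covers all of them at once, so no single answer can be edited after the fact without detection.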

This offers a new kind of auditability, one that is much finer-grained and more closely connected to reality than traditional logs or compliance reports. This is agentic accountability.

Building the infrastructure for accountable autonomy

Three core components make this possible.

1. Shared state with traceable transitions

Every human or agentic action updates a flow state encoded in a real-time conflict-free replicated data type (CRDT) record, which is mirrored across federated and sovereign Matrix rooms. Nothing is hidden. Nothing disappears. Each edit is attributed, timestamped, and linked to the capability that enabled it.

An agent can’t “move the workflow along” unless the previous steps have been verifiably completed, as evidenced by the claims agents submitted for them. Flow nodes become contracts, not suggestions.
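The idea of flow nodes as contracts can be sketched in a few lines. This is a simplified, in-memory illustration only: the `Flow`, `FlowNode`, and `Transition` names are hypothetical, CRDT merging and Matrix mirroring are not modelled, and the sketch assumes the caller has already verified the step's claim before calling `complete`.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class FlowNode:
    name: str
    verified: bool = False  # set only once the step's claim has been verified


@dataclass(frozen=True)
class Transition:
    """Attributed, timestamped record of one state change."""
    node: str
    actor: str
    capability: str
    timestamp: str


@dataclass
class Flow:
    nodes: list[FlowNode]
    history: list[Transition] = field(default_factory=list)

    def complete(self, index: int, actor: str, capability: str) -> None:
        """Mark a step verified, but only if every earlier step already is."""
        blocked = [n.name for n in self.nodes[:index] if not n.verified]
        if blocked:
            raise PermissionError(f"unverified earlier steps: {blocked}")
        self.nodes[index].verified = True
        self.history.append(Transition(
            node=self.nodes[index].name,
            actor=actor,
            capability=capability,
            timestamp=datetime.now(timezone.utc).isoformat(),
        ))


flow = Flow([FlowNode("collect"), FlowNode("evaluate"), FlowNode("pay")])

try:
    flow.complete(2, actor="agent-1", capability="flow/pay")
    skipped_ahead = True
except PermissionError:
    skipped_ahead = False  # jumping straight to "pay" is refused

flow.complete(0, actor="agent-1", capability="flow/collect")
flow.complete(1, actor="agent-2", capability="flow/evaluate")
```

After this runs, `flow.history` holds two transitions, each naming the actor and the capability used, while the "pay" step remains unverified.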

2. Delegated capabilities with UCANs

We treat authority as something that can be delegated, scoped, and revoked. Not role-based access control. Not trust by default. The question becomes:

- Which capability was the agent given permission to use, for which object, and under what conditions?

Every action traces back to an explicit user-controlled authorisation network (UCAN) delegation.

This makes responsibility legible. It also makes misalignment visible.
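As a rough sketch of how a delegation chain can answer that question, the example below walks a chain of delegations back to the resource owner. It is not the UCAN token format and uses no UCAN library: the `Delegation` class, the `authorises` function, the prefix-based resource scoping, and the integer timestamps are all assumptions made for illustration, and signature checks are left out entirely.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Delegation:
    """Hypothetical delegation link: issuer grants audience one scoped action."""
    id: str
    issuer: str
    audience: str
    action: str
    resource: str    # scope as a path prefix, e.g. "flow/42/"
    expires_at: int  # illustrative integer timestamp
    parent: Optional["Delegation"] = None


def authorises(d: Delegation, agent: str, action: str, resource: str,
               now: int, revoked: set[str], root: str) -> bool:
    """True only if every link back to the root owner permits the request."""
    if d.audience != agent:
        return False
    link: Optional[Delegation] = d
    while link is not None:
        if link.id in revoked or now >= link.expires_at:
            return False  # revoked or expired anywhere in the chain
        if link.action != action or not resource.startswith(link.resource):
            return False  # request falls outside this link's scope
        if link.parent is None:
            return link.issuer == root  # chain must start at the owner
        if link.issuer != link.parent.audience:
            return False  # broken chain: issuer never held the capability
        link = link.parent
    return False


d1 = Delegation("d1", "owner", "alice", "claim/submit", "flow/42/", expires_at=500)
d2 = Delegation("d2", "alice", "agent", "claim/submit", "flow/42/step/3/",
                expires_at=500, parent=d1)

allowed = authorises(d2, "agent", "claim/submit", "flow/42/step/3/",
                     now=100, revoked=set(), root="owner")
out_of_scope = authorises(d2, "agent", "claim/submit", "flow/42/step/4/",
                          now=100, revoked=set(), root="owner")
after_revocation = authorises(d2, "agent", "claim/submit", "flow/42/step/3/",
                              now=100, revoked={"d1"}, root="owner")
```

Because the request has to fall inside the scope of every link, a delegate can never end up with more authority than the party that delegated to it, and revoking `d1` cuts off everything issued beneath it.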

3. Outcome verification through claims and credentials

Actions that produce measurable results (evaluations, predictions, decisions, data transformations) are expressed as verifiable claims. These can be checked by independent oracles, validated by causal or statistical tests, and anchored on-chain so they cannot be rewritten later.

This closes the loop of intent → action → evidence → verification → state update → agent payment.
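The loop can be sketched as a single settlement step in which state and payment only change once a claim has been verified. The names here (`Claim`, `settle`, `threshold_oracle`) and the simple measured-versus-target check are hypothetical stand-ins: a real oracle would run independent causal or statistical tests, and the digest would be anchored on-chain rather than kept in a dictionary.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Callable


@dataclass(frozen=True)
class Claim:
    """Hypothetical claim: an agent asserts an action met an intent."""
    agent: str
    intent: str
    action: str
    evidence: dict


def claim_digest(claim: Claim) -> str:
    """Digest of the claim; the value a system could anchor on-chain."""
    payload = json.dumps(asdict(claim), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def settle(claim: Claim, oracle: Callable[[Claim], bool],
           state: dict, ledger: dict, fee: int) -> bool:
    """Verify first; update state and pay the agent only on success."""
    if not oracle(claim):
        return False  # nothing changes for an unverified claim
    state[claim.intent] = claim_digest(claim)                # state update
    ledger[claim.agent] = ledger.get(claim.agent, 0) + fee   # agent payment
    return True


def threshold_oracle(claim: Claim) -> bool:
    """Toy check: the measured result must reach the agreed target."""
    measured = claim.evidence.get("measured", 0)
    target = claim.evidence.get("target", float("inf"))
    return measured >= target


state: dict = {}
ledger: dict = {}
good = Claim("agent-1", "plant-trees", "planted", {"measured": 120, "target": 100})
bad = Claim("agent-2", "plant-trees-b", "planted", {"measured": 80, "target": 100})

good_settled = settle(good, threshold_oracle, state, ledger, fee=5)
bad_settled = settle(bad, threshold_oracle, state, ledger, fee=5)
```

Here only `agent-1` is paid, and only the verified claim's digest reaches the shared state; the failed claim leaves both untouched.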

It also means you can run entire cooperation workflows where every step is independently accountable.

Why we’re building this in public

We’re not simply shipping features. We’re shaping norms.

If we try to build an accountability layer behind closed doors, we’ll replicate the same patterns that centralised technology companies created: invisible decision-making, unexplainable failures, unpredictable behaviour.

By building in public, three things happen:

- Design becomes an open conversation. Our community sees the trade-offs, the edges, the places we’re unsure, and the reasoning behind decisions.

- Missteps get corrected early. Transparency is an early-warning system.

- The norms become shared property. Accountability is only meaningful when everyone understands how it works and can participate in shaping it.

This is also how trust is formed. People trust what they can inspect, not what they’re told to believe.

Where this leads

Agentic accountability isn’t a compliance requirement. It’s a precondition for voluntary intelligent cooperation at scale.

Real Intelligence Is a Multi-Player Game. For too long, we’ve treated intelligence as something that happens inside—inside a person, inside a lab, inside a model. But real intelligence emerges between things. Between people. Between machines. Between intentions that choose to… pic.twitter.com/0qJeHb9IMB

— Shaun Conway 🦋 (@shaunbconway) October 6, 2025

When humans and agents work together, they need a shared language for:

- authority

- evidence

- justification

- responsibility

- correction

Our infrastructure turns these from abstract principles into executable protocols. The system doesn’t ask you to trust the agent.

It gives you the proof.

And ultimately, the goal isn’t to constrain agency. It’s to make it dependable. When autonomy is paired with accountability, agents stop feeling like black boxes and start feeling like collaborators.

A question to carry forward

If agents can now justify their actions, verify their outcomes, and expose their reasoning—how will this change the way people expect accountability from human systems too?

That’s the future we’re stepping into, one transparent iteration at a time.

To see our flow, and the verifiable claims of what we are building in public, check out: