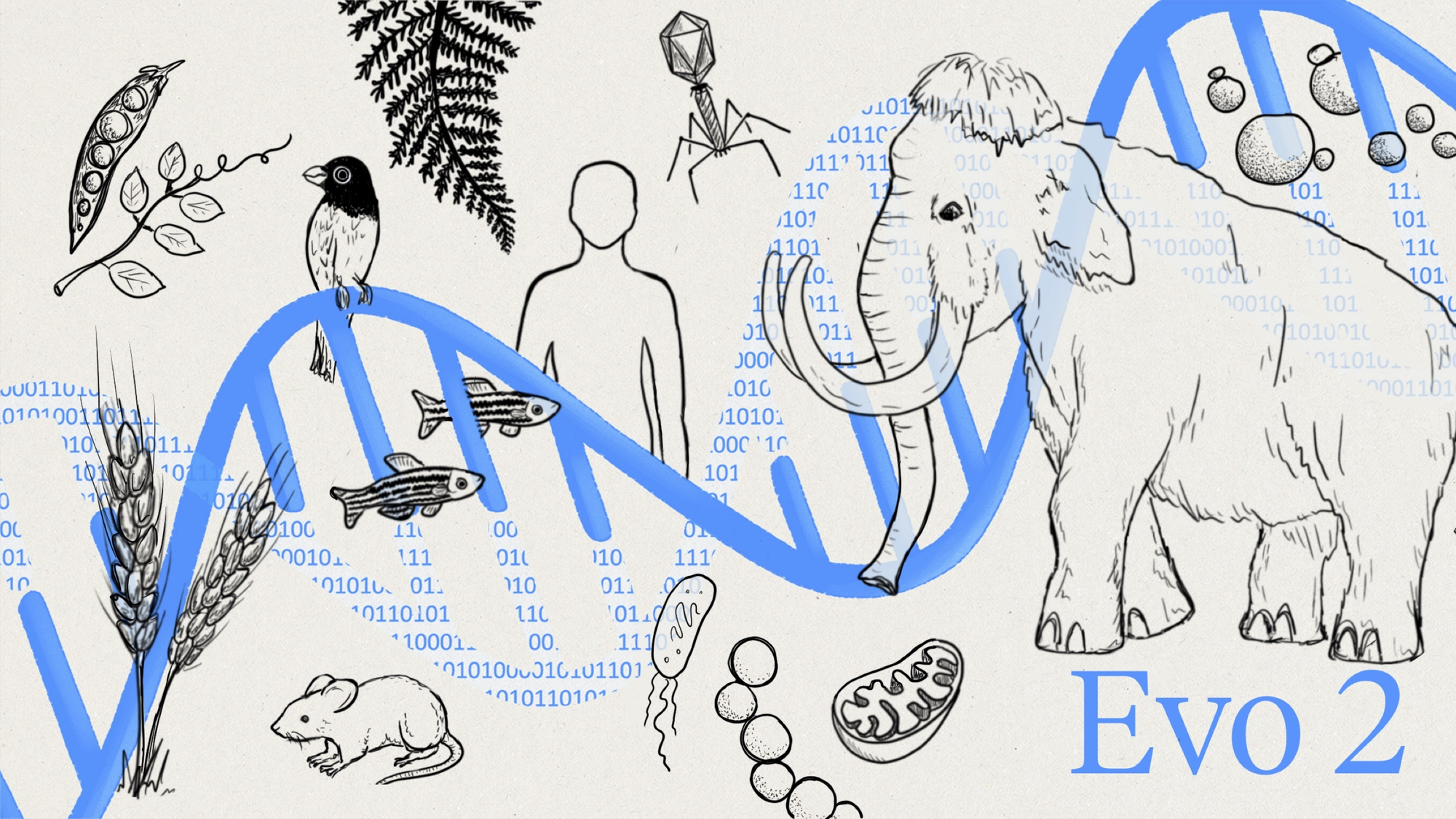

Genomic data with AI transforms how we understand and use the code of life. All of life encodes information with DNA. Now we can even generate this code for novel biological systems—in much the same way as we use LLMs to code software applications.

The Code of Life

Arc Institute just released Evo-2, a state-of-the-art DNA language model that has been trained on 9.3 trillion DNA base pairs from a highly curated genomic atlas spanning all domains of life. This AI model can identify disease-causing mutations in human, animal, and plant genomes. It can also be used to generate novel designs for biological systems. Advancements such as this offer immense potential for accelerating vaccine and therapy development, and for enhancing pandemic prediction and prevention.

Black and White

Foundation models such as Evo-2 are black boxes in the sense that they learn complex patterns from vast data without explicit human-defined rules. Their strength lies in prediction—detecting subtle genomic patterns or proposing novel genetic designs that no human could intuit. For high-stakes domains like healthcare or biosecurity, we need more than just accurate predictions; we need to understand why an AI recommends a certain action and to trust that recommendation enough to act on it.

This is where white-box causal models come in. By integrating AI’s pattern-finding prowess with models that capture cause and effect (for example, how a genetic change causes a disease, or how an intervention would impact an outbreak), we get the best of both worlds. Purely predictive AI without causal insight can lead to serious mistakes in policy and healthcare: simply predicting human behaviour or disease risk can misdirect interventions unless we also identify the underlying causes. We need AI systems that explain why something happens so that we can find the most effective levers for change.

Coupling predictions with causal reasoning means that our outputs translate into real-world action plans. For instance, we can identify which vaccine candidate might work and the biological mechanism by which it would neutralise a virus. This makes our AI insights immediately practical and testable.

By marrying black-box and white-box approaches, we can generate actionable intelligence, not just theoretical insights.

Using white-box models such as a disease spread simulator or gene network model, we can trace how we arrived at a recommendation. Stakeholders can then inspect the cause-effect pathways, building confidence in the result.

Decisions grounded in models of causality enable us to verify AI predictions against observed real-world outcomes. We must be able to continuously measure, report, and verify whether an intervention works as expected. This is the classic cybernetic loop of sense→decide→act→learn, in which systems integrate machine intelligence with human oversight through feedback loops. This enables AI to be used with scientific validation and to be regulated with evidence for accountability.

When lives depend on AI-driven decisions, we must be able to explain “why” as well as “what” to do.

The team behind Evo-2 released their model together with tools to help interpret its inner workings—which is a step toward opening the black box. A mechanistic interpretability visualiser reveals what features Evo-2 is recognising in DNA. Evo-2 acts as a powerful prediction engine, while scientists apply their domain knowledge—which we can consider a form of causal reasoning—to validate and build on its findings. The result is AI that doesn’t just churn out genomic insights, but does so in a way that researchers can trust and act upon.

Feedback Loops

Building a truly cybernetic system, one that intelligently links decisions to actions with measured feedback, requires more than technical precision: it demands robust governance and accountability at every step. Consider who is responsible if an AI-driven recommendation turns out wrong or harmful, and how we can ensure that actions performed with AI align with ethical norms and public interest.

A key principle is to keep humans (or communities) in the loop for oversight. As AI agents become more autonomous, there must be clear checkpoints where human experts or stakeholders review decisions, especially in healthcare or public policy settings. For example, an AI might flag a new pathogen outbreak and even suggest containment measures, but public health officials should verify the data and approve the response plan. This kind of oversight is greatly aided by transparency: we need to see why the AI is suggesting certain actions (hence the importance of the explainability discussed above) and track what data it used. This is where we believe the IXO protocols and systems we have developed for validating claims and for measuring, reporting, and verifying outcomes could contribute significantly towards making powerful AI more feasible to use in high-impact, real-world applications.

Accountability means that AI systems should keep detailed, tamper-proof logs of their decisions and the outcomes of those decisions. If a mistake happens, we should be able to audit the chain of events and identify the cause, much like a black box flight recorder in aviation. Enterprises, governments and regulators are increasingly demanding auditability and oversight for the responsible use of AI. Blockchain technology offers a promising solution: immutable ledgers can record proofs at every stage of an AI’s development and deployment, making it possible to verify what is done with AI and to track the results of using AI. With IXO we are enabling networks of AI agents to operate with mechanisms that make them well-governed, safe, auditable, and accountable by design. In practice, this means any data-driven claim or action an AI agent makes (say, an oracle predicting a disease surge) can be traced, verified by independent parties, and approved through democratic processes, such as a DAO of experts, before resources are mobilised.
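To make the idea of a tamper-proof decision log concrete, here is a minimal Python sketch of a hash-chained log, the basic structure that blockchain ledgers build on. It is an illustration only and not the IXO implementation: the function names `append_entry` and `verify_chain` and the record fields are hypothetical. Strictly speaking, a hash chain makes tampering detectable rather than impossible.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash a record together with the hash of the entry before it."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: list, payload: dict) -> None:
    """Append a decision record, linking it to the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"payload": payload, "prev": prev,
                "hash": entry_hash(prev, payload)})


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited record breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev:
            return False
        if entry["hash"] != entry_hash(prev, entry["payload"]):
            return False
        prev = entry["hash"]
    return True


# Hypothetical records: an AI oracle's decision and a human review of it.
log = []
append_entry(log, {"agent": "oracle-1", "decision": "flag sequence"})
append_entry(log, {"agent": "reviewer-1", "decision": "confirm flag"})
print(verify_chain(log))
```

Because each entry's hash depends on everything before it, an auditor who holds only the latest hash can detect a change to any earlier record.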

Here governance is not intended to constrain innovation, but rather to close the feedback loop. In a cybernetic sense, governance provides the corrective feedback: if an AI’s action leads to unintended effects, accountability mechanisms detect it through impact measurement, reporting, and verification, enabling the system to learn and adjust, much like a thermostat correcting a ventilation system when a room gets too hot or cold. By measuring real-world impacts with high-definition data, which is a core focus of the IXO Internet of Impacts approach, we ensure the AI’s decisions actually achieve the intended positive outcomes. Impact measurement becomes the learning signal in the AI’s loop, telling us whether the AI-driven action made things better and informing the next cycle of decisions. This relentless focus on measurable outcomes and accountability transforms an AI from an oracle in an ivory tower to a responsible agent in society. This also enables outcomes to be financed, including with novel mechanisms.
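The thermostat analogy can be sketched as a simple corrective feedback loop in Python. This is a toy illustration under stated assumptions, not a model of any real intervention: the function `run_feedback_loop`, the `gain` parameter, and the `toy_world` response (the measured outcome is simply twice the effort applied) are all hypothetical.

```python
from typing import Callable, List, Tuple


def run_feedback_loop(target: float,
                      measure: Callable[[float], float],
                      gain: float = 0.25,
                      cycles: int = 20) -> List[Tuple[float, float]]:
    """Adjust effort each cycle in proportion to the measured shortfall."""
    effort = 0.0
    history = []
    for _ in range(cycles):
        observed = measure(effort)   # sense: measure the real-world outcome
        error = target - observed    # decide: compare with the intended outcome
        effort += gain * error       # act: correct the intervention
        history.append((effort, observed))  # keep a record to learn from
    return history


def toy_world(effort: float) -> float:
    """Assumed response of the world: outcome is twice the effort."""
    return 2.0 * effort


history = run_feedback_loop(target=10.0, measure=toy_world)
final_effort, last_observed = history[-1]
print(round(final_effort, 3), round(last_observed, 3))
```

In this toy setting the measured outcome settles on the target only because each cycle feeds the measurement back into the next decision. Remove the measurement and the loop has nothing to correct against.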

Threats and Opportunities

The power to read and write the code of life comes with profound ethical dilemmas. Nowhere is this more evident than in pathogen genomics. These powerful new AI systems could potentially be used to design novel viruses or to enhance the pathogenicity of microbes, which makes their capabilities double-edged. Given the ethical and safety implications, the Evo-2 team deliberately excluded from their training data the genomes of pathogens that infect humans or other complex organisms. In other words, Evo-2 was not trained on the DNA of known deadly viruses or bacteria, and the model has been programmed not to output functional sequences for potentially harmful agents. This decision provides a striking example of self-imposed limitation in the name of safety: the researchers drew a line to prevent the AI from becoming a facile tool for creating potentially harmful pathogens.

This choice highlights the dual-use dilemma in AI-genomics. On one hand, including deadly pathogen data could help the AI detect dangerous mutations or even help in designing countermeasures, such as vaccines and antibodies, which would clearly be beneficial for public health. On the other hand, a bad actor could ask a fully uninhibited model to generate a superbug, and even well-intentioned users could misuse it by accident. The Evo-2 team prioritised the precautionary principle: don’t arm AI with knowledge that could be misused with catastrophic consequences. But isn’t this just kicking the ethics ball down the road?

Who decides what counts as “too dangerous” for an AI to know? Should there be international standards for such exclusions, much as there are treaties for bioweapons? If one research group holds back, will another elsewhere push forward without restrictions?

These questions underscore why we need governance frameworks and global consensus in AI-driven genomics. It’s not enough for one team to act responsibly; the community as a whole should establish norms, and possibly regulations, on AI research involving pathogens, data sharing, and publication of results. We saw during the COVID-19 pandemic how open genomic data sharing accelerated vaccine development, but also how debates raged over “gain-of-function” research. With AI automating parts of this work, those debates intensify. Ensuring ethical guardrails means involving ethicists, policymakers, and the public early in the development cycle. Evo-2’s filtered training set is an early example of ethical design choices. Going forward, initiatives such as ours to build a global “Digital Immune System for Humanity” must bake in the necessary guardrails at a systems level, by combining verifiable data, the right incentives, and appropriate oversight mechanisms, to stop disease threats while avoiding doing harm.

Decentralising Ownership and Control

Decentralised protocols implemented using blockchains enable new governance models for AI, and a whole lot more. Smart contracts can enforce rules, for example by not allowing an AI agent to execute certain actions or access certain data unless predefined conditions are met or approvals are given. Decision rights and oversight roles can be distributed among a consortium of scientists, health agencies, and communities, rather than resting with a single authority or the AI developers alone. This democratises control and oversight, so that no rogue actor, whether human or AI, can intentionally or unintentionally skew outcomes unchecked. If something goes wrong, the incident is logged indelibly, and responsibility can be assigned and reviewed.
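The rule-enforcement idea can be illustrated with a small approval gate, written here in plain Python rather than in a smart-contract language. It is a sketch under assumptions, not an IXO contract: the `ApprovalGate` class, the reviewer names, and the two-of-three threshold are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ApprovalGate:
    """Blocks an action until enough authorised reviewers approve it."""
    reviewers: frozenset
    threshold: int
    approvals: set = field(default_factory=set)

    def approve(self, reviewer: str) -> None:
        if reviewer not in self.reviewers:
            raise PermissionError(f"{reviewer} is not an authorised reviewer")
        self.approvals.add(reviewer)  # a set, so repeat votes count once

    def is_open(self) -> bool:
        return len(self.approvals) >= self.threshold


def execute_if_approved(gate: ApprovalGate, action: Callable[[], str]) -> str:
    """Run the agent's action only when the gate's rule is satisfied."""
    if not gate.is_open():
        raise PermissionError("approval threshold not met")
    return action()


# Hypothetical consortium: any two of three parties must approve.
gate = ApprovalGate(
    reviewers=frozenset({"health-agency", "research-lab", "community-rep"}),
    threshold=2,
)
gate.approve("health-agency")
gate.approve("research-lab")
print(execute_if_approved(gate, lambda: "containment plan released"))
```

On a blockchain the same check would run inside a contract that every party can inspect, so no single participant could bypass it. The Python version only shows the logic of the rule.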

Blockchain-based digital public goods infrastructure allows multiple parties across countries and institutions to contribute data and AI models in a shared network without centralised ownership. In a field like pathogen genomics, this is game-changing. Data from labs around the world can be contributed as verifiable credentials on-chain, and AI models can consume this global data soup to improve their predictions. Importantly, contributors can be rewarded—with tokenised payments or reputation points—for providing high-quality data or model improvements, which creates incentives for collaboration. This approach aligns with the FAIR data principles—Findable, Accessible, Interoperable, Reusable—that global health experts champion for pathogen surveillance. It turns AI development into a collective, open endeavour rather than a siloed competition. And because the digital public goods that get created are added to the network, each improvement or discovery becomes part of a permanent, reviewable knowledge base for all of humanity, rather than sitting in someone’s lab notebook.

At IXO we have been essentially building a trust layer for impact-driven AI. The IXO Platform provides protocols and infrastructure for interconnected networks of agents to operate with confidence in data veracity and governance. In practical terms, imagine an AI oracle that scans genomic sequences for dangerous mutations—operating as a digital pathogen sentinel. This oracle could publish its findings as claims to the blockchain, where they’re time-stamped and signed. Other agents or experts could then verify these claims—for example, cross-checking with laboratory results—before any alert is escalated. If the claim is confirmed, a smart contract can automatically trigger funding for a response and provide alerts to relevant authorities—with each step executed transparently and according to agreed rules. Throughout, anyone with permission can inspect the trail: the raw data, the AI model’s analysis, the verification, and the actions taken. Trust is engineered into the system, rather than being an afterthought.
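The claim, verify, and trigger flow described above might look roughly like the following Python sketch. It is an assumption-laden illustration and does not use the IXO protocol or SDK: the `Claim` fields, the rule that two independent verdicts must agree, and the action names are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    SUBMITTED = "submitted"
    CONFIRMED = "confirmed"
    REJECTED = "rejected"


@dataclass
class Claim:
    claim_id: str
    issuer: str
    statement: str
    verdicts: dict = field(default_factory=dict)  # verifier -> agrees?
    status: Status = Status.SUBMITTED


def record_verdict(claim: Claim, verifier: str, agrees: bool) -> None:
    """Store one verifier's verdict; issuers may not verify themselves."""
    if verifier == claim.issuer:
        raise ValueError("issuer cannot verify its own claim")
    claim.verdicts[verifier] = agrees


def evaluate(claim: Claim, required: int = 2) -> Status:
    """Confirm or reject once `required` independent verdicts agree."""
    yes = sum(1 for agrees in claim.verdicts.values() if agrees)
    no = len(claim.verdicts) - yes
    if yes >= required:
        claim.status = Status.CONFIRMED
    elif no >= required:
        claim.status = Status.REJECTED
    return claim.status


def actions_for(claim: Claim) -> list:
    """Only a confirmed claim triggers the downstream response."""
    if claim.status is Status.CONFIRMED:
        return ["release_response_funding", "notify_authorities"]
    return []


claim = Claim("claim-001", "sentinel-oracle", "sequence carries a flagged mutation")
record_verdict(claim, "lab-a", True)
record_verdict(claim, "lab-b", True)
evaluate(claim)
print(claim.status.value, actions_for(claim))
```

Keeping the verdicts alongside the claim gives the inspectable trail that the text calls for: anyone with permission can see who confirmed what before any action was triggered.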

Evolving Our Digital Immune System

Looking ahead, the convergence of Evo-2-like AI models, ethical design, and blockchain governance points toward a new paradigm for how we tackle global challenges. Nowhere is this more exciting than in pathogen genomics, where speed and trust are literally a matter of life and death. The work we are now doing in collaboration with leading global institutions exemplifies this paradigm in action. We continue to build towards the vision of a global digital immune system for humanity, augmented by AI, that can sense pathogenic threats in real time, analyse them with AI grounded in explainable models, and coordinate responses through transparent, accountable networks of stakeholders.

This aligns closely with initiatives like the WHO’s International Pathogen Surveillance Network, which calls for international collaboration, data sharing, and equity in genomic surveillance. What IXO adds is the technological backbone to make such collaboration trustworthy and efficient. Through our blockchain-based Internet of Impacts platform, we can collectively ensure that pathogen genome data and AI analyses are shared under a common trust framework, where each participant—whether a national public health lab, a research university, or an AI model provider—plays by the same verified rules. Equitable access is a core part of the vision—every country’s health system, not just the richest, should benefit from the AI insights and contribute data to improve them. A decentralised network makes this possible, preventing gatekeeping of life-saving information.

Imagine how a model such as Evo-2 can now be deployed for antimicrobial resistance or pandemic early warning. The model’s recommendations—say, identifying a novel virus strain that looks poised to jump to humans—would be vetted through a white-box epidemiological model and then recorded on-chain as a risk signal. Global agencies subscribed to this feed would immediately get an alert, with the assurance that the alert has been explainably generated and independently verified. If false, the network’s feedback mechanisms would quickly suppress it—avoiding false alarms; if valid, coordinated action could kick off even before an outbreak spreads. All of this would happen with clear accountability—from the data sources to the AI model version to the decision logic—mitigating the political and public skepticism that often hampers swift response.

Towards Super-Intelligence

We can see how the fusion of advanced AI and rigorous governance has the potential to create intelligent cybernetic systems that could profoundly improve how we solve complex biological and societal challenges. By combining black-box models like Evo-2 with white-box causal reasoning, we get AI that is both smart and wise—delivering insights we can trust. By embedding these AI agents in frameworks of accountability and using blockchain as the connective tissue, we ensure that this intelligence operates in service of humanity, not in a void.

We hope that our pioneering efforts in AI and pathogen genomics will show how open, transparent, and collaborative approaches can transform isolated algorithms into part of a living, learning system—one that is continually sensing, deciding, and adapting in pursuit of global health and well-being. This forward-looking model doesn’t just react to problems; it anticipates and prevents them, learning from every outcome. In short, this is the blueprint for high-trust AI in the real world—a digital immune system for humanity that evolves and improves with each interaction, sensing threats and effectively responding with precision, while respecting our human values.
